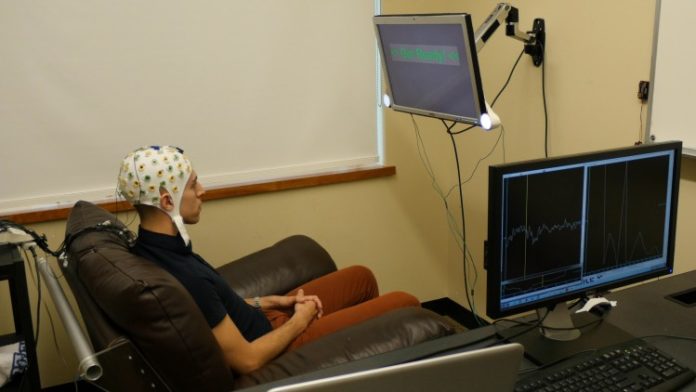

A University of Washington graduate student wears an electroencephalography (EEG) cap that records brain activity and sends a response to a second participant over the Internet. (Photo Credit: UW)

Imagine being able to communicate with others through only your thoughts. No words, no signs are exchanged: only pure information traveling directly from one brain to another.

Of course, that is the stuff of dreams and science-fiction flicks. In the real world, the closest scientists have come to establishing direct communication between brains involves an extremely convoluted apparatus that would take hours to transmit the amount of information typically exchanged in a two-minute conversation. Nevertheless, research on these brain-to-brain interfaces (BBIs), as they are called, is valuable because it might one day allow patients with brain damage who cannot speak to communicate by other means. In a recent PLOS ONE report, University of Washington researchers Dr. Andrea Stocco, Dr. Rajesh Rao and colleagues build on previous research to demonstrate that BBIs can actually be used to solve problems, albeit only within the narrow confines of a laboratory experiment.

“Guess what I’m thinking about”

In the experiment, Rao and colleagues built on previous work from their lab and others to design the brain-to-brain interface. Two participants played a game of “guess what I’m thinking about”, in which the inquirer (the one doing the guessing) asked “yes-or-no” questions of the respondent (the one thinking of an object). Scientific experiments often need to keep the number of parameters as low as possible, and this one was no exception: the respondent had to think of one object among 8 in a predetermined category (for instance, “dog” among 7 other animals), and the inquirer, who knew the list of objects but not which one the respondent had selected, could only ask three predetermined “yes-or-no” questions (e.g. “Does it fly?”). The brain-to-brain interface came into play in the way the respondent’s answers were communicated to the inquirer.
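The game is essentially a search over bits: eight objects can be told apart with exactly three yes-or-no answers (2^3 = 8), provided each question splits the remaining candidates in half. The minimal Python sketch below assumes a hypothetical category of eight animals and three hypothetical questions chosen so that every animal has a unique pattern of answers; the study's actual object lists and questions are not reproduced here.

```python
import math

# Hypothetical questions and animals (not the study's actual lists).
# Each animal is described by its answers to the three questions, in order.
QUESTIONS = ["Is it a mammal?", "Does it live in water?", "Is it bigger than a person?"]

ANIMALS = {
    "dog":      (True,  False, False),
    "elephant": (True,  False, True),
    "otter":    (True,  True,  False),
    "whale":    (True,  True,  True),
    "sparrow":  (False, False, False),
    "ostrich":  (False, False, True),
    "goldfish": (False, True,  False),
    "shark":    (False, True,  True),
}


def guess(answers):
    """Keep only the animals consistent with each yes/no answer (True = yes)."""
    candidates = list(ANIMALS)
    for i, answer in enumerate(answers):
        candidates = [a for a in candidates if ANIMALS[a][i] == answer]
    return candidates


# Each answer carries at most 1 bit; log2(8) = 3 bits single out one of 8 objects.
print(math.log2(len(ANIMALS)))       # 3.0
print(guess([True, True, False]))    # ['otter']
```

If the questions did not split the candidates evenly, some answer patterns would leave several animals in the running after three questions, which is why the choice of questions matters as much as the number of them.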

From brain to brain via EEG and magnetic pulses

To indicate his or her answer, the respondent looked at one of two LED lights, one flashing at 13 Hz to code for “yes”, the other flashing at 12 Hz for “no”. Activity in the respondent’s visual cortex then oscillated at the frequency of the attended light (a so-called steady-state visual evoked potential), and an EEG system could pick up and decode this activity reliably in real time. The “yes-or-no” answer was then transmitted to the inquirer’s brain using a transcranial magnetic stimulation (TMS) machine. TMS stimulates the cerebral cortex non-invasively by sending brief magnetic pulses through the scalp and skull, which in turn briefly change the activity of neurons in a given patch of cortex. When applied to the visual cortex at the back of the head, TMS pulses can trigger the perception of brief flashes of light called phosphenes. Here, Rao and colleagues simply controlled the intensity of the TMS pulses so that a “yes” answer would reliably induce a phosphene in the inquirer, whereas a “no” answer would not.
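The decoding step can be illustrated with a deliberately simple rule: compare the EEG power at 13 Hz with the power at 12 Hz and report whichever is larger. The Python sketch below is not the study's decoder; the sampling rate, the 4-second window, the synthetic signal, and the `tms_intensity` rule with its 10-point margin are all hypothetical, chosen only to show how a decoded answer could be turned into a pulse above or below the inquirer's phosphene threshold.

```python
import math
import random

FS = 256                     # hypothetical EEG sampling rate (Hz)
YES_HZ, NO_HZ = 13.0, 12.0   # LED flicker frequencies described in the study


def simulate_eeg(flicker_hz, seconds=4, noise=0.5, seed=0):
    """Synthetic EEG: a sinusoid at the attended flicker rate plus Gaussian noise."""
    rng = random.Random(seed)
    return [math.sin(2 * math.pi * flicker_hz * n / FS) + rng.gauss(0, noise)
            for n in range(int(seconds * FS))]


def power_at(signal, freq, fs=FS):
    """Spectral power of `signal` at `freq`, computed as a single DFT bin."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return re * re + im * im


def decode_answer(signal):
    """'yes' if the 13 Hz response is stronger than the 12 Hz response, else 'no'."""
    return "yes" if power_at(signal, YES_HZ) > power_at(signal, NO_HZ) else "no"


def tms_intensity(answer, phosphene_threshold, margin=10):
    """Hypothetical rule: stimulate above the inquirer's phosphene threshold for
    'yes' and below it for 'no' (units: % of maximum stimulator output)."""
    return phosphene_threshold + margin if answer == "yes" else phosphene_threshold - margin


answer = decode_answer(simulate_eeg(YES_HZ))
print(answer)                                          # yes
print(tms_intensity(answer, phosphene_threshold=60))   # 70
```

A 4-second window at 256 Hz contains a whole number of cycles at both 12 and 13 Hz, so the two frequencies fall on separate DFT bins and do not leak into each other. Practical steady-state visual evoked potential decoders typically use more robust methods, such as canonical correlation analysis across several electrodes.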

Not yet at the speed of thought

Again, one cannot overemphasize how clumsy, and how slow, the whole system was: the respondents needed to fixate on the flashing LED for up to 20 seconds for the EEG decoder to pick up their answer, and the inquirers needed 1 to 2 hours of training to detect phosphenes reliably before even getting started in earnest. Compare this to the five seconds it would have taken each inquirer to guess which object the respondent was thinking of if they could have talked to each other! Nevertheless, this study established a couple of important points for future research. First, the information was transmitted correctly almost 95% of the time, which is not bad at all (and will likely be improved upon in further work). Second, the brain-to-brain interface worked in real time, a prerequisite for its use as a replacement for communication by standard means.
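To put "slow" into numbers, brain-computer interface researchers often use the information transfer rate (ITR) formula of Wolpaw and colleagues, which combines the number of possible choices, the accuracy, and the time per choice. This is a standard field metric, not an analysis from the PLOS ONE paper; the sketch below simply plugs in the figures quoted above (two possible answers, roughly 95% accuracy, up to 20 seconds per answer) as assumptions.

```python
import math


def bits_per_selection(n_choices, accuracy):
    """Wolpaw information transfer per selection, in bits:

    B = log2(N) + P*log2(P) + (1 - P)*log2((1 - P) / (N - 1))
    """
    n, p = n_choices, accuracy
    if p <= 1.0 / n:
        return 0.0            # at or below chance: no information conveyed
    if p >= 1.0:
        return math.log2(n)   # perfect accuracy: the error terms vanish
    return (math.log2(n) + p * math.log2(p)
            + (1 - p) * math.log2((1 - p) / (n - 1)))


def bits_per_minute(n_choices, accuracy, seconds_per_selection):
    """Information transfer rate, in bits per minute."""
    return bits_per_selection(n_choices, accuracy) * 60.0 / seconds_per_selection


# Assumed figures from the text: 2 answers, ~95% accuracy, 20 s per answer.
print(round(bits_per_selection(2, 0.95), 2))   # 0.71
print(round(bits_per_minute(2, 0.95, 20), 1))  # 2.1
```

At about 2 bits per minute, the three answers needed to single out one of eight objects take roughly a minute of fixation alone, before any time spent on the questions themselves.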

Perspectives for the future

Rao and colleagues mention the possibility that brain-to-brain interfaces could one day help patients who cannot speak following damage to the language centers of the brain (as in Broca’s aphasia). I find the idea fascinating. These patients generally retain most of their intellectual faculties, and would most likely be able to associate a “yes” answer with the color green and “no” with red, for instance. A carefully designed classification tree of words, images, and concepts could then be navigated with these “yes-or-no” answers to convey fairly complex ideas. The same technique could potentially be applied to communicate with patients suffering from severe sensorimotor impairments such as locked-in syndrome, a devastating brainstem injury in which the individual remains conscious but is unable to move nearly all voluntary muscles. Clearly, we are still just as far from mind-reading as we were last year. Nevertheless, in the near future brain-to-brain interfaces should benefit from the fast pace of progress in neural stimulation and in decoding neural activity from electrophysiological recordings, becoming incrementally faster, more reliable and more practical. What we will be able to do then might already make a significant difference.
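A classification tree like the one described above can be sketched as a binary tree in which every inner node is a yes-or-no question and every leaf is a concept. The `Node` class, the `navigate` function, and the tiny vocabulary below are hypothetical illustrations, not something proposed by Rao and colleagues. Since d questions can reach at most 2^d concepts, even a modest depth covers a sizable vocabulary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical concept-tree node: a question if it has children, else a concept."""
    text: str
    yes: Optional["Node"] = None
    no: Optional["Node"] = None

    def is_leaf(self) -> bool:
        return self.yes is None and self.no is None


def navigate(root: Node, answers) -> Optional[str]:
    """Follow yes/no answers (True = "yes", e.g. green; False = "no", e.g. red)."""
    node = root
    for answer in answers:
        if node.is_leaf():
            break                                  # extra answers are ignored once a concept is reached
        node = node.yes if answer else node.no
    return node.text if node.is_leaf() else None   # None: more answers are needed


# Hypothetical, tiny vocabulary; a usable system would need a far larger tree.
tree = Node("Is it a physical need?",
            yes=Node("Is it about eating or drinking?",
                     yes=Node("Is it a drink?", yes=Node("water"), no=Node("food")),
                     no=Node("pain")),
            no=Node("Is it about other people?",
                    yes=Node("call my family"),
                    no=Node("turn on the radio")))

print(navigate(tree, [True, True, True]))   # water
print(navigate(tree, [False, True]))        # call my family
print(navigate(tree, [True]))               # None
```

Branches need not all have the same depth: frequently needed concepts can sit near the root so that they take fewer answers to reach.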